Will Artificial Intelligence be the Death of Photography?

We have a new religious war brewing in the photography world: the emergence of Artificial Intelligence tools to assist with (or perhaps take the driver’s seat in) your digital darkroom post-processing. And as you might expect, there are people out there claiming this will be the end of photography, or of creativity in photography, or, um, that it’s new, it’s different, and so by definition, it’s bad.

This, of course, is just the latest round of the “it’s not photography unless you do it exactly the way I want it to be done” school of thoughtful, intelligent discussion. Previous versions of this include:

You must get it right in camera and not post-process or it’s wrong

HDR is wrong (okay, in the early 2000s, there was a lot of bad HDR out there, but once we learned how to use it well….)

Cloning is evil

You must shoot in manual mode

Auto-focus is a tool for lazy photographers (so is burst mode)

Digital Photography is evil — photography’s true being is with film

And so on… In other words, in the eyes of some groups of self-defined standards setters, innovation is bad. Change is bad. We should all return to the core roots of photography, which is why I’m throwing out my digital gear and setting up a nice daguerreotype lab in 2021.

Yeah, right. Not really.

One of the first problems with this fight is, of course, that even trying to define “A.I.” is complicated. One of the more thoughtful takes I’ve seen on this is by photographer Richard Wong, and it is well worth a read.

Let’s dig into this a bit. Since this is my blog, I get to play judge and jury, and here are the witnesses pro and con:

Witness for the Prosecution

Witness for the Defense

AI’s Role in the Future of Photography

When we start talking about AI and Machine Learning in photography, the witnesses above are talking about our digital darkroom post-processing tools, and they aren’t even touching the area where this has had the most impact: our phone cameras, where both Apple’s iOS and Google’s Android have made major strides in image quality via software rather than hardware innovation. A good piece for getting a sense of just what your phone is doing behind the scenes to create those great images is Understanding ProRAW, from the developers of the iOS app Halide. Lost in some of the arguing over A.I. in the digital darkroom is the fact that the darkroom tools are adopting these enhanced software techniques to keep up with what the phones have been doing, so that they stay remotely competitive.

I’ve been grappling with how these tools fit into my own philosophy and workflow this year. About a year ago, I dug into Luminar 4 by Skylum, and I ultimately decided that Luminar’s focus on the creative aspects of post-processing was not for me, so I removed it from my system. I was never able to decide whether Luminar was giving me the best version of my image, or whether I was creating an image that was really Luminar’s idea of a good image. It just wasn’t the right tool for me.

More recently, I’ve been experimenting with some of the tools from Topaz Labs. Specifically, I’ve gotten myself copies of the DeNoise AI and Sharpen AI tools, as well as their Gigapixel AI for growing the pixel size of images. These tools interested me for a few reasons:

I’ve always struggled with sharpening; I would say at my best today I’m, well, okay. I’ve gotten better, and when I go look at images I processed 5+ years ago I often wince as I reset and reprocess them. It seemed to me that a tool that understood what and how to sharpen would benefit me. Added bonus: Topaz claims the tool can improve the sharpness of an image where the focus point just misses a key feature like the eyes, which might make a marginal image usable or better, and I wanted to try that out.

Noise reduction seemed like an obvious thing Machine Learning could help with. I’m often shooting at higher ISO in poor light, and while I think I’m decent at reducing noise manually, an automated assist can’t hurt.

Image up-sizing is specific to my printing; I wanted to see how it might improve my larger prints, and perhaps open the door to really large prints through a lab. The Topaz Gigapixel tool has consistently gotten good reviews for how well it grows an image to a larger size.

I’ll note that none of these applications of A.I. tools are “creative”; they handle specific types of post-processing actions that refine an image rather than creatively altering it. One of the reasons I went with the Topaz tools, other than a number of recommendations from photographers I trust, is that they act as separate tools via Lightroom plug-ins, whereas the Luminar software really wants to own the entire post-production process, even when used as a Lightroom plug-in. The Topaz tools work the way I want them to: as specific functions that use machine learning to enhance a specific operation.

How do they work? I’ve run about a dozen images through them so far. Sometimes I see absolutely no visible change, as in this image:

I ran it through both the sharpener and the de-noiser, and after each, the only change I could see with the naked eye was some sharpening improvement in the clouds. There were definitely changes visible at the 100% view, but in terms of perceptible improvement, there was none. This is an important point to remember: not all images need these tools, although I haven’t seen any indication they damage an image, so using them routinely at normal settings seems harmless.

This image, however:

If you look at the eagle’s eye you’ll see the focus point is just a bit off. Enough to really annoy me but not enough for me to reject the image. It was an obvious test candidate for these tools. As plug-ins, they are called via the Edit In menu:

This creates a copy of the image as a TIFF file which is passed on to the tool:

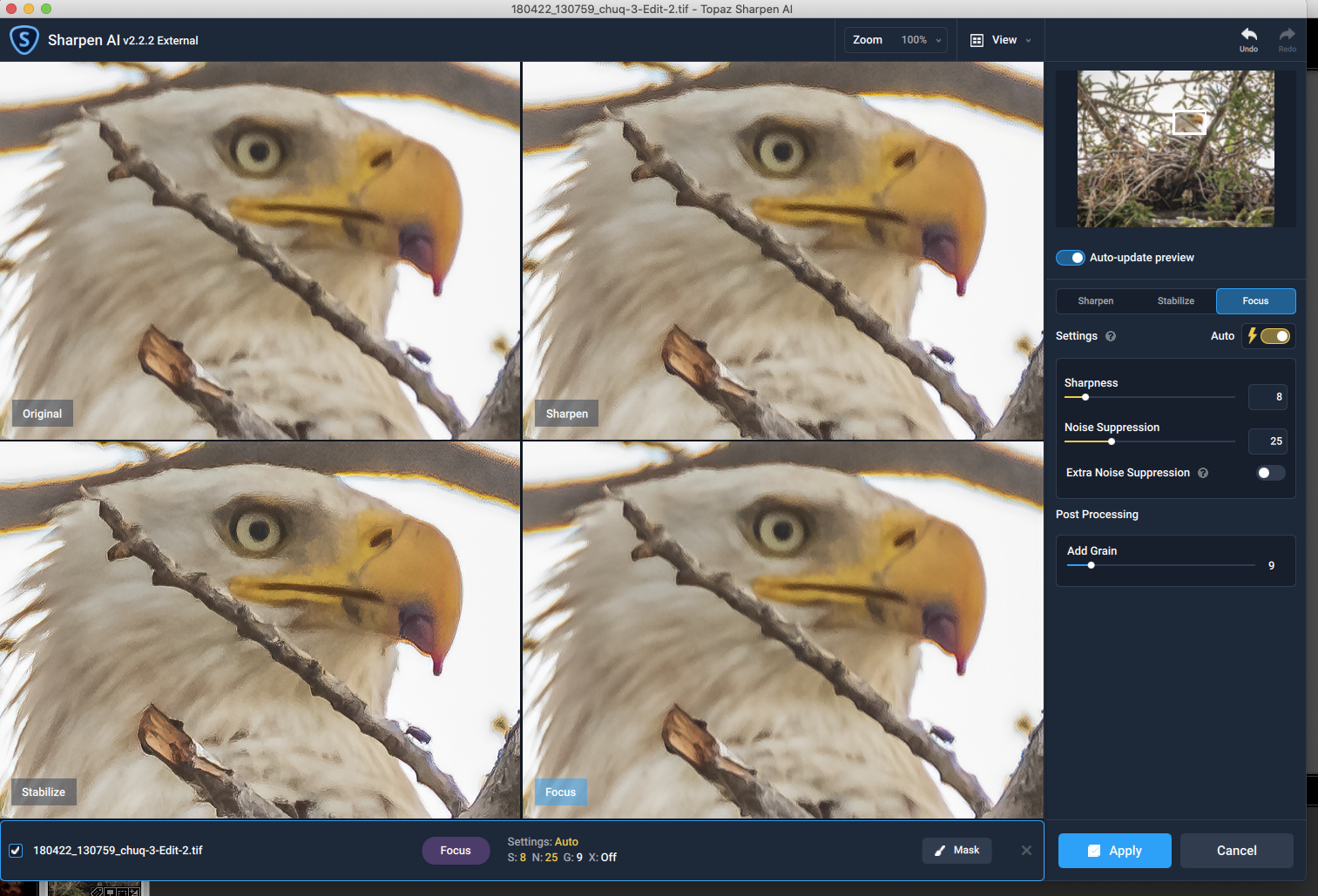

The Sharpen AI tool has three different styles: general sharpening, sharpening to correct for camera shake, and sharpening to improve focus. Here is a look at previews of all three styles plus the original using the auto button settings.

I decided to crank up the intensity and see what happened:

It took a bit over a minute to process:

Looking at those previews closely, it seemed that Stabilize might improve the image more, so I ran it again:

To my eye, the stabilize mode did improve sharpness of the image more, especially around the eye, but added more noise. So let’s toss that over to the denoise tool:

The Denoise tool has a few modes, too, including the standard one, “All Clear”, and Low Light. To my eye, the standard mode looks best. I will note that the sharpener has created some artifacts here (check above the eye) that will drive the pixel peepers crazy, but I don’t find them noticeable at normal resolutions. I expect I can tune the tool to minimize them if I work with it a bit.

Here is that image after processing in the de-noiser:

Here is the original image on the left, and the final sharpened, de-noised version on the right. Click on them to bring them up to compare:

I can’t see a noticeable improvement in the side-by-side images above, but I do see it in the larger images in the lightbox. All of these images have been resized to 2200 pixels on the long side, my standard for anything posted online these days. Viewing the full-size originals on my monitor, the difference is more noticeable, but even in the reduced web-sized images, the improvements are there. I haven’t test-printed this image yet, but I expect the improvements to be more dramatic on paper than on pixels.

Are Topaz Labs Tools Keepers?

They certainly look like keepers to me. I’m not sure I’ll use them constantly, but I do expect to find enough use for them to make them a good value, especially in the print workflows. I’m thinking that using these for sharpening and denoising on most images may make sense, although it’ll add at least 5 minutes or so to every image I process with them.

I note I haven’t talked about the Gigapixel AI tool here; I’m just starting to experiment with it with my printer, and I’ll cover it in a later piece once I get a number of images through it and onto paper. My expectations are fairly high, though, given what I’m seeing here.

So, Will Artificial Intelligence Be the Death of Photography?

Of course not. You should ignore anyone who tells you it will, if only because A.I. isn’t one thing; it’s a bunch of different tools and technologies. You can choose (as I have) not to use Sky Replacement on your images, one of the common targets of the anti-A.I. attacks, but that doesn’t mean other aspects of these machine-learning-based processing tools aren’t useful.

I’d also suggest that many of the people decrying A.I.’s encroachment into desktop image processing tools like Lightroom or Luminar also use their phone cameras on a fairly regular basis, and those people really need to stop and rethink their opinions: what we’re seeing implemented in the desktop tools is already in common use in automated phone image workflows, so they are using it constantly whether they realize it or not. These innovations are bringing those capabilities into the realm of those of us serious enough about our photography to put time into post-processing.

We are going to adopt these tools; it’s a matter of when, not if, and of which tools for what purposes. What Luminar is doing is fascinating and some great innovation, even if it’s not for me personally. The Topaz tools are more what I wanted, and I’m quite happy adopting them.

I don’t think it’s appropriate for any photographer to tell any other photographer how to be a photographer; the quickest way to have me drop you from my feeds is to start explaining that your way of doing photography is how we should all be doing it. Everyone needs to decide what works and what matters to them, and build out their photography and processing workflows from there. The only person I will let tell me how an image must be processed to be acceptable is someone, like a National Geographic editor, who is holding out a check and wants to buy it.

For me, I see the emergence of these tools as a way to improve some areas of my post-processing that I know I’m weak in. Remember when image sharpening meant multiple layers in Photoshop with Gaussian blurs and various pixel tweaks? Most of us happily left that behind when Lightroom’s sharpening tools got good. These tools are that next-generation leap forward: not the easy button, per se, but more the “let me handle the grunt work” button.

I’m all for that, and you should be, too. So whenever you run into that photographer who opens the discussion with “you have to get it right in camera, or…”, smile, nod, and get the hell out of earshot as fast as possible. What matters isn’t what they think is a proper image; it’s what you think. And these tools are simply tools in the toolbox that you can adopt, or not, as it makes sense for what you’re trying to accomplish.

What matters is that at the end of the workflow pipeline you are happy with the result.

The rest is noise that gets in the way of that, and I suggest doing your best to tune as much of it out as you can.